Every day, knowledge workers receive a deluge of information that is critical to their work. Company-issued documents, market research, and news all contain insights required to make the best possible decisions. But given the sheer volume of content, it is not realistic to read everything, and key information may be missed.

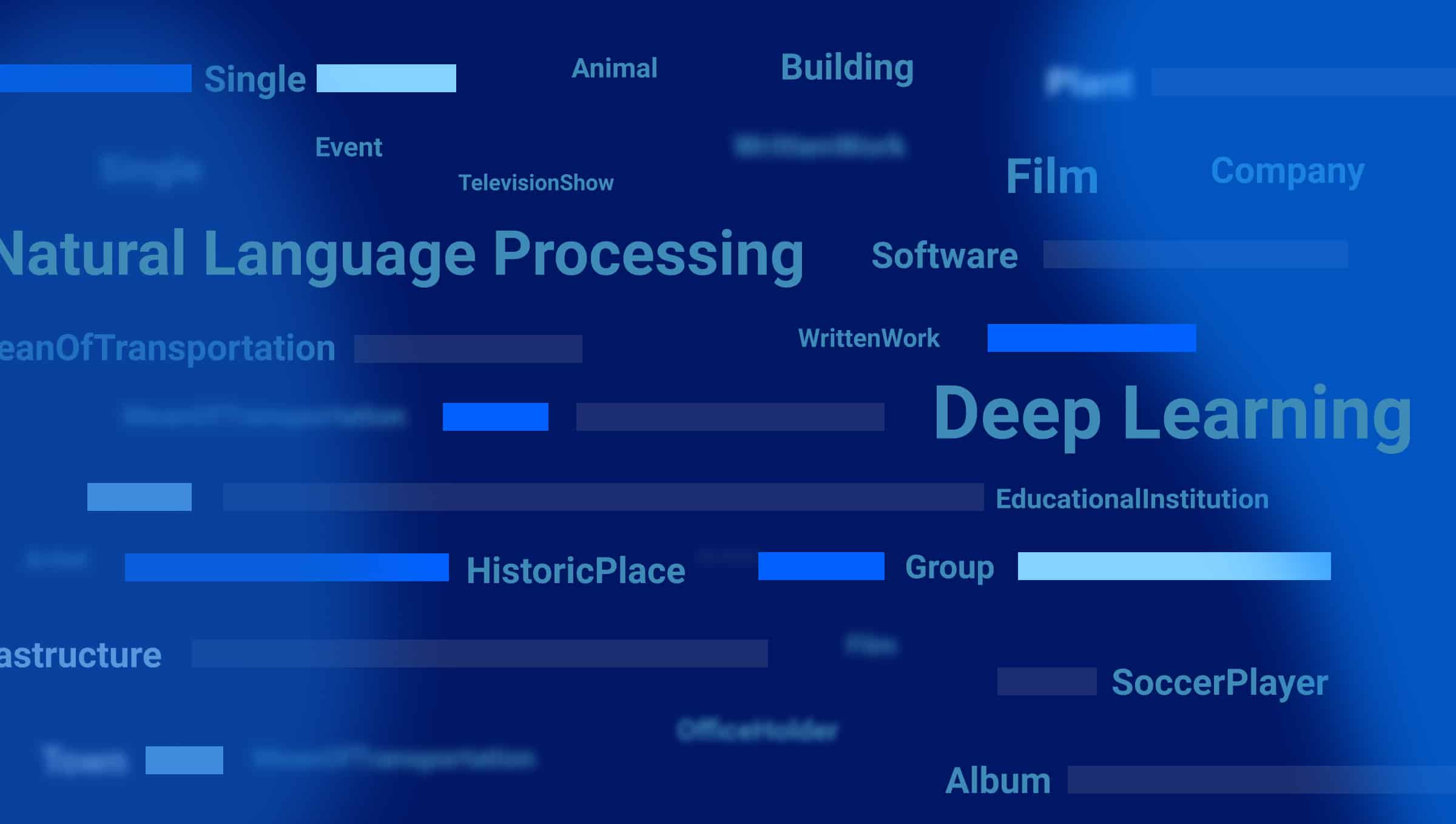

However, recent advances in Natural Language Processing (NLP) and Deep Learning (DL) research allow for automated extraction and summarization of information from documents, helping humans process overwhelming amounts of information more efficiently. This is part of the AI technology that powers AlphaSense (the AlphaSense Language Model), and we’re constantly looking for ways to improve the technology further to empower our users.

The AlphaSense AI research team collaborated with Carnegie Mellon University to explore novel text summarization methods, understand current challenges, and apply what we learned to next-generation algorithms to more effectively summarize financial documents.

This research focuses on aspect-based, multi-document summarization, which generates summaries organized by specific aspects of interest across multiple domains. Such summaries make text analysis more efficient, for example by letting readers quickly understand reviews or opinions from different angles and perspectives.

In AlphaSense, imagine a user searching for ‘737 max’. At the peak of the crisis at Boeing, there were thousands of new and relevant documents flowing through the platform every week. A useful summarizer might extract key points from those documents and organize them based on terms (designated as aspects for our research and this article) like:

- impact on the business

- regulatory risk

- potential litigation

- reaction of airlines

- CEO commentary

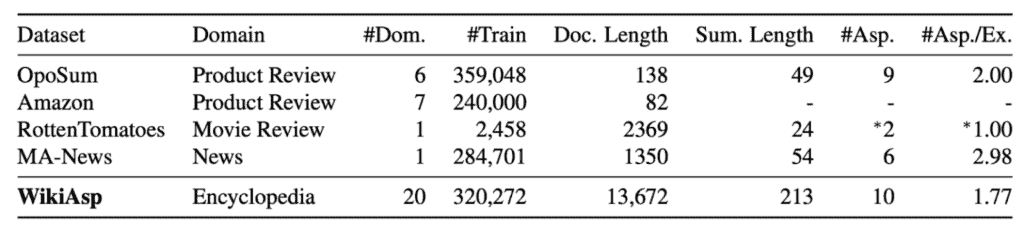

As a result of our research, we have released a multidomain aspect-based summarization dataset, WikiAsp, to advance the research in this direction. The baseline evaluation and insights gained on the WikiAsp dataset are summarized in the recently published paper “WikiAsp: A Dataset for Multi-domain Aspect-based Summarization,” which appears in the Transactions of the Association for Computational Linguistics, a publication by MIT Press.

Aspect-Based Summarization

WikiAsp.

Aspect-based summarization is a task that aims to provide targeted summaries of a document from different perspectives; which elements of the document are considered depends on its context. For example, when looking at a TV product review, there are different aspects to consider: image, sound, connectivity, price, and more (see some examples in the OpoSum dataset). Furthermore, the relevant aspects vary across domains, while previous models have tended to be domain-specific. This research aims to apply a generic method to summarize documents from varying domains and with multiple aspects.

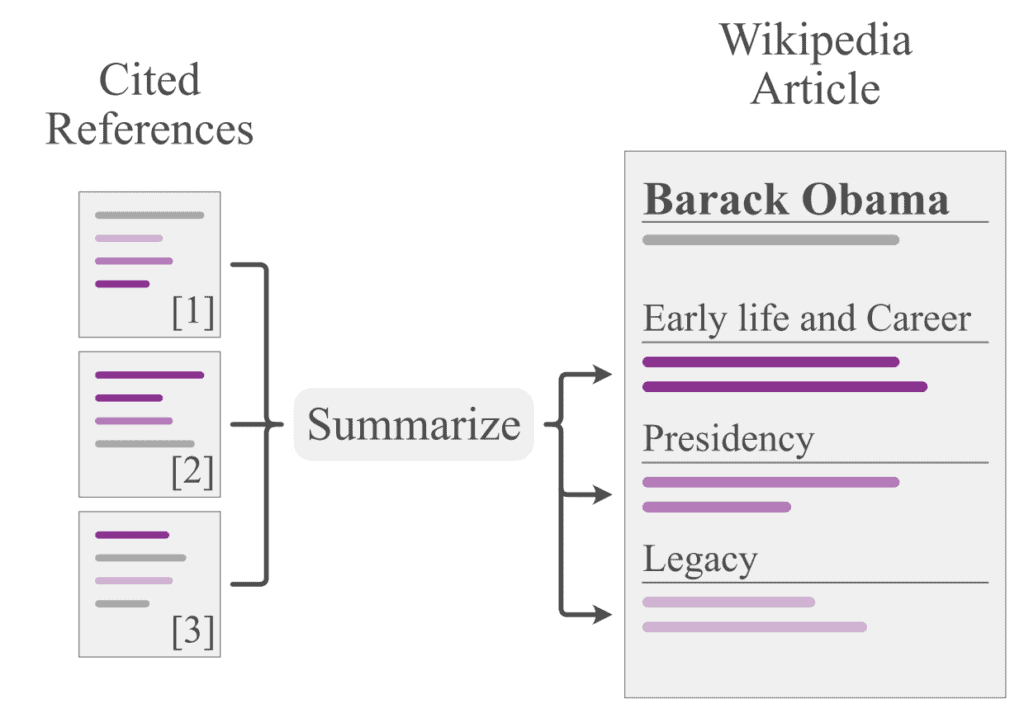

For this purpose, we built an open-source dataset from Wikipedia articles called WikiAspect, or WikiAsp for short. Wikipedia is an easily accessible source of multidomain, multi-aspect summary content, and it makes a serviceable dataset because it includes articles across many domains. Its sectional structure, i.e., each article’s section boundaries and titles, forms natural annotations of aspects and the corresponding text. Furthermore, Wikipedia requires that an article’s content be verifiable from a set of references, so the cited references should contain the majority of the information in the article. In WikiAsp, we use the cited references of a Wikipedia article as input context and construct sets of “aspects” from its section titles through automatic extraction, curation, and filtering steps. The section texts then serve as the corresponding aspect-based summaries.
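As a rough illustration of this construction, the sketch below pairs sentences from cited references with section texts keyed by section title. The `AspectExample` class, the `build_example` function, and the sample data are hypothetical names made up for this article, and the simple title filter is only a stand-in for the extraction, curation, and filtering steps, not the actual WikiAsp pipeline.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class AspectExample:
    """Hypothetical record: cited text as input, per-aspect summaries as targets."""
    title: str
    inputs: List[str]                                       # sentences from cited references
    targets: Dict[str, str] = field(default_factory=dict)   # aspect -> summary text


def build_example(title, reference_sentences, sections, allowed_aspects):
    """Keep only sections whose normalized title is in the allowed aspect set."""
    targets = {}
    for section_title, section_text in sections:
        aspect = section_title.strip().lower()
        if aspect in allowed_aspects and section_text.strip():
            targets[aspect] = section_text.strip()
    return AspectExample(title=title, inputs=list(reference_sentences), targets=targets)


example = build_example(
    title="Example Company",  # made-up article
    reference_sentences=["The company was founded in 1990.", "It sells software."],
    sections=[
        ("History", "Founded in 1990."),
        ("See also", "Other pages."),   # filtered out: not a useful aspect
        ("Products", "Software."),
    ],
    allowed_aspects={"history", "products"},
)
print(example.targets)
```

Here the section titles that survive filtering become the aspects, and each section's text becomes the reference summary for that aspect.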

Baseline

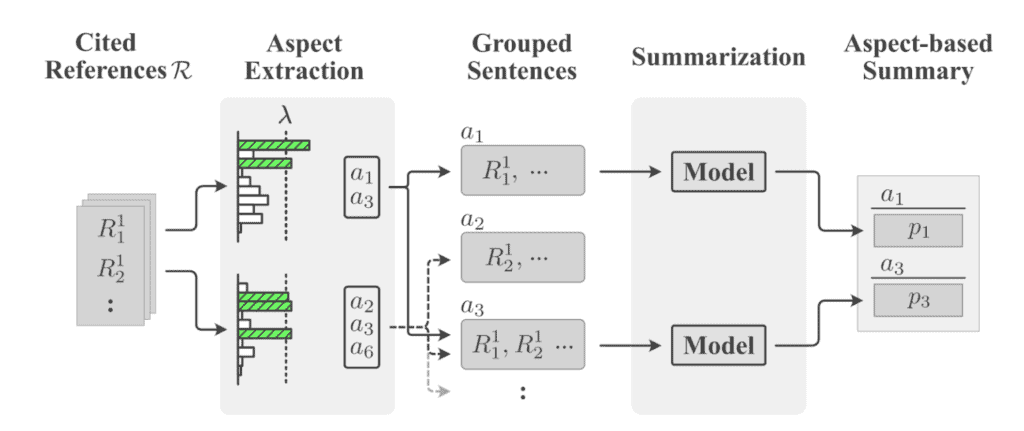

After collecting the WikiAsp dataset, we wanted to gain more insight into aspect-based summarization and to identify its significant challenges and potential solutions. For this purpose, we experimented with baseline methods on WikiAsp, devising a two-stage approach: aspect discovery followed by aspect-based summarization, as shown in the accompanying figure.

The first stage is aspect discovery, which classifies sentences from the cited references into aspects. We use a fine-tuned RoBERTa model for aspect discovery; it predicts which aspects of a given domain each sentence belongs to, as well as whether the sentence is relevant in the first place.
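To make the grouping step concrete, here is a minimal sketch that assigns each sentence to every aspect whose score clears a threshold. It assumes a multi-label setup with a fixed threshold, and it swaps the fine-tuned RoBERTa classifier for a toy keyword scorer; `keyword_scores`, `discover_aspects`, the threshold value, and the keyword lists are all hypothetical.

```python
def keyword_scores(sentence, aspect_keywords):
    """Toy stand-in for a classifier: share of an aspect's keywords found in the sentence."""
    words = set(sentence.lower().replace(".", " ").replace(",", " ").split())
    return {
        aspect: len(words & keywords) / len(keywords)
        for aspect, keywords in aspect_keywords.items()
    }


def discover_aspects(sentences, score_fn, threshold=0.5):
    """Group sentences under every aspect whose score reaches the threshold.

    A sentence can land in several groups, or in none if it looks irrelevant.
    """
    groups = {}
    for sentence in sentences:
        for aspect, score in score_fn(sentence).items():
            if score >= threshold:
                groups.setdefault(aspect, []).append(sentence)
    return groups


ASPECT_KEYWORDS = {  # hypothetical aspects and keywords
    "regulatory risk": {"regulator", "grounded"},
    "litigation": {"lawsuit", "court"},
}
sentences = [
    "The regulator grounded the fleet.",
    "A lawsuit was filed in federal court.",
    "The weather was sunny.",
]
groups = discover_aspects(sentences, lambda s: keyword_scores(s, ASPECT_KEYWORDS))
print(groups)
```

In a real system the scoring function would be the trained classifier; the grouping logic around it can stay this simple.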

The second stage is aspect-based summarization. There are two types of summarization: extractive, which selects important content from the original text as the summary, and abstractive, which generates new text. We test an extractive method (TextRank) and an abstractive method (BERTSum) on the labeled and grouped sentences to perform supervised aspect-based summarization.
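For readers unfamiliar with TextRank, the sketch below shows the general idea: build a graph of sentences weighted by word overlap, run a PageRank-style iteration, and keep the top-scoring sentences. This is a simplified illustration rather than the configuration used in our experiments; the tokenizer, the similarity function, and the parameter values are assumptions.

```python
import math
import re


def tokenize(sentence):
    """Lowercased set of alphanumeric tokens."""
    return set(re.findall(r"[a-z0-9]+", sentence.lower()))


def similarity(a, b):
    """Word overlap normalized by log sentence lengths, in the spirit of TextRank."""
    denom = math.log(len(a)) + math.log(len(b)) if a and b else 0.0
    return len(a & b) / denom if denom > 0 else 0.0


def textrank(sentences, top_k=2, damping=0.85, iterations=50):
    """Return the top_k highest-ranked sentences, in their original order."""
    if not sentences:
        return []
    n = len(sentences)
    tokens = [tokenize(s) for s in sentences]
    weights = [
        [similarity(tokens[i], tokens[j]) if i != j else 0.0 for j in range(n)]
        for i in range(n)
    ]
    out_sums = [sum(row) for row in weights]
    scores = [1.0 / n] * n
    for _ in range(iterations):
        scores = [
            (1 - damping) / n
            + damping * sum(
                weights[j][i] / out_sums[j] * scores[j]
                for j in range(n)
                if out_sums[j] > 0
            )
            for i in range(n)
        ]
    best = sorted(range(n), key=lambda i: scores[i], reverse=True)[:top_k]
    return [sentences[i] for i in sorted(best)]


docs = [
    "Boeing grounded the 737 Max fleet after the crash.",
    "Regulators grounded the 737 Max fleet worldwide.",
    "Airlines cancelled flights after the 737 Max fleet was grounded.",
    "Coffee prices rose slightly.",
]
summary = textrank(docs, top_k=2)
print(summary)
```

Sentences that share vocabulary with many others reinforce each other's scores, while an unrelated sentence such as the last one receives only the baseline score and drops out of the summary.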

The baseline experiments surfaced two main observations. First, training samples are imbalanced across aspects, so the aspect classifier has high recall but low precision. This means that training needs to be balanced carefully across aspects. Second, we use the ROUGE score, which measures the overlap between generated summaries and the ground truth, to evaluate summarization quality. We find that both the abstractive and extractive baselines have low ROUGE scores (see the Experiments section of the paper). This shows that multidomain, aspect-based summarization is a challenging task requiring more advanced algorithms.
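As a simplified illustration of what ROUGE measures, the sketch below computes ROUGE-1 (unigram overlap) precision, recall, and F1 between a generated summary and a reference. It assumes plain lowercase tokenization with no stemming or stopword handling, so its numbers will not match those of standard ROUGE toolkits; the function name and example strings are made up.

```python
import re
from collections import Counter


def rouge_1(candidate, reference):
    """Unigram-overlap precision, recall, and F1 (a simplified ROUGE-1)."""
    cand = Counter(re.findall(r"[a-z0-9]+", candidate.lower()))
    ref = Counter(re.findall(r"[a-z0-9]+", reference.lower()))
    overlap = sum((cand & ref).values())  # clipped count of shared unigrams
    precision = overlap / sum(cand.values()) if cand else 0.0
    recall = overlap / sum(ref.values()) if ref else 0.0
    total = precision + recall
    f1 = 2 * precision * recall / total if total else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}


scores = rouge_1(
    candidate="The fleet was grounded",
    reference="Regulators grounded the fleet worldwide",
)
print(scores)
```

In this example three of the four candidate words appear in the reference, and three of the five reference words are covered by the candidate, so precision is 0.75 and recall is 0.6.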

We also observe that aspects requiring content to be summarized in a particular temporal order (e.g., time-series events) add extra difficulty because of the need to correctly order scattered and possibly duplicate pieces of information from different sources. Certain domains that involve interviews or quotes from people also pose challenges in correctly modifying pronouns based on their relationship to the topic of interest.

Future Steps

Through this research, we built WikiAsp, a large-scale, multidomain multi-aspect summarization dataset derived from Wikipedia. WikiAsp invites a focus on multiple domains of interest to investigate and solve some of the various problems in text summarization and provides a testbed to develop and evaluate new text summarization algorithms. Through our initial benchmarking, we identified challenges to address in future work and research.

As the next step, we plan to apply this two-stage method to summarize financial documents, where it is common to see multiple domains and multiple aspects. Although fully automated summarization at a domain-expert level still has a long way to go, we can leverage some of the developments from this work. For example, we plan to use abstractive summarization methods to highlight important aspects of a company and the associated sentences in an earnings call, so readers can quickly understand what is happening with the company without reading the entire transcript.

Acknowledgment

This work is based on the fruitful collaboration between the AI research team, Professor Graham Neubig, and Ph.D. student Hiroaki Hayashi.
