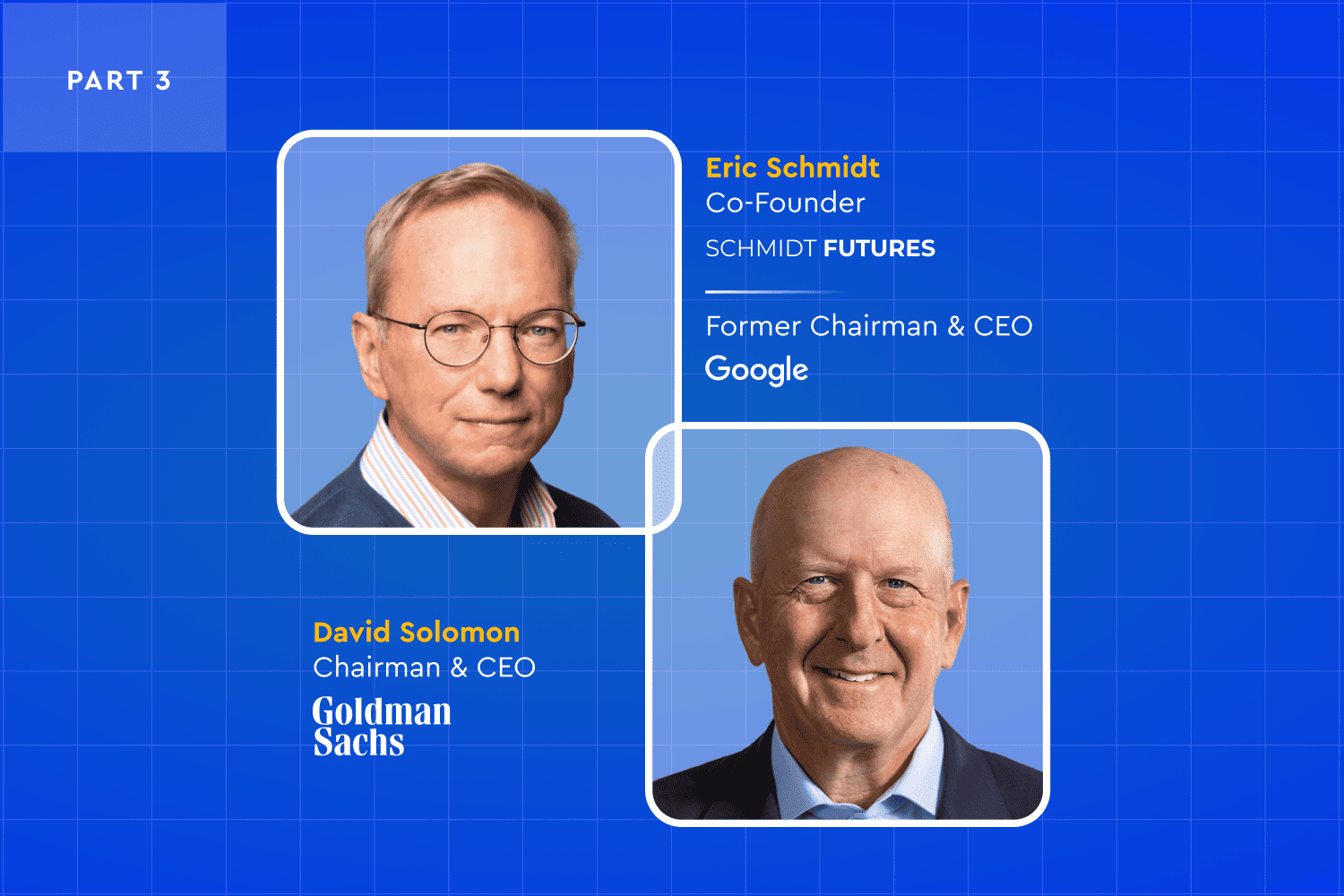

AlphaSense has remained dedicated to its mission of revolutionizing the way professionals make critical business decisions through the transformative capabilities of AI. As we cast our gaze towards an AI-empowered future, we spoke with two luminaries from the tech and financial spheres: Eric Schmidt, Co-Founder of Schmidt Futures and Former CEO & Chairman of Google, and David Solomon, Chairman and CEO of Goldman Sachs. Together, we delve into the unfolding capabilities of generative AI (genAI), its rapid proliferation, and the potential it carries to solve some of the world’s greatest challenges.

Below, Schmidt and Solomon explore the broader realm of genAI and its profound ability to impact both commerce and society on a grand scale. Some of the pressing topics they tackle include: the industries most poised for disruption, the unraveling of uncertainties and risks associated with an over-reliance on genAI, and the areas of opportunity for this tech to solve global problems.

To hear more from these two great minds, watch the complete video conversation at alpha-sense.com/genai.

Solomon: You know, there are a lot of problems in the world today that seem unsolvable. Are there some really, really big problems you think AI can accelerate our curve to solve?

Schmidt: Climate change.

If you assume nuclear and climate are the two existential threats to the world, the nuclear problem is not solvable by AI but the climate one is. There are so many people working on scientific solutions, new materials, new energy sources, new energy distributions, new energy algorithms, that AI will be central to solving climate change. Without AI, we won’t get there. With AI, we have a chance.

Solomon: Advances in technology that are even hard to imagine now have to be the solution to that problem.

Schmidt: If I told you we shouldn’t invent the telephone because the telephone will be used by criminals, you’d say, “What an idiot.” And yet let’s say the telephone was just about to be invented and you said, “This is brilliant,” and I said, “Well, it’s going to get misused. Stop.”

We have a long history of the development of dual-use technologies. Nuclear being a very dangerous example, which ultimately was beneficial and harmful at the same time. The truth is that AI will be enormously beneficial. Healthcare, education, the discovery of drugs to solve problems that have bedeviled humans for thousands of years, eliminating diseases, inequality, all of these things are possible.

It’s also possible it will give asymmetric power to evil people. If you think about the Japanese assault with sarin 20 years ago on the subway, how much knowledge do you actually have to have to recreate that? And how much of that knowledge is now available?

When I was in college a long time ago, an undergraduate managed to design a plutonium bomb from open-source material. This was before the internet.

Solomon: Wow.

Schmidt: He submitted his thesis and they said, “We have to classify it.” Then they had this basic problem of nobody being able to read it because it was classified. They ultimately decided that, if you have a classified undergraduate thesis, we’ll just give you your degree.

So the fact that open-source information can be combined by clever people is not a new fact. This was 50 years ago. What is new now is the ease with which that access can be presented right in front of you.

Solomon: You touched a little bit on regulation. Do you have some ideas as to what that regulatory construct should look like? Or how that regulatory construct should evolve?

Schmidt: I do. So the first thing I’d like to say is everyone has a complaint about the internet. I have mine. You have yours. Everyone has them.

There are many people who proposed a division of internet regulation. I cannot imagine how you’d build it, fund it, write it, tell it what to do, tell it what its powers are. I think it’s highly unlikely that there’ll be an internet regulatory body in any country that’s a democracy because democracies are just too complicated, which is the core of democracy.

We should focus on extreme and existential risks. The new problem is that the frontier models and the open-source models can be misused at scale. The frontier models, the big ones, they’re all going to get regulated. They’re highly visible and they’re accountable. The government is going to pay attention. The people who are running them essentially are asking for regulation because of the potential downside dangers.

The hardest problem is how do you regulate open source? Open source here means you release the code and all the numbers. These are the numbers that cost $100 million to calculate.

So if you were to just release those numbers, then an evil person/company/country could easily replicate all of that information and misuse it in a really bad way.

Solomon: I want to shift gears, and I want to talk about AI and geopolitics. The US government is obviously changing the landscape around semiconductors and semiconductor exports around the world. How is that going to reshape global competition around these technologies?

Schmidt: If you do a world survey, Europe is now in the process of writing regulations that will make it almost impossible to do the things that I’m talking about. This is a mistake.

Britain has an Online Safety Bill, which is not as bad but is also highly regulatory. That may or may not hurt them. China has AI ethics and AI rules, which effectively make it impossible to use LLMs without a lot of censorship. You can’t answer a question about Tiananmen Square.

America, for all of our faults, looks like we have a chance to move quickest. My scoring is that the US and the UK are basically ahead. Europe is going to be behind and will remain behind unless something changes. China is at least three years behind, partly because they didn’t get into the space due to fear of regulation and partly because of the lack of Chinese-language information.

Finally, the impact of these chip curbs. On October 7th of last year, the Biden administration announced restrictions on exports of high-speed chips. There’s a lot of evidence that those have hurt China, at least for a while. China is working hard to catch up. They’re trying to do their training outside the country. They’re trying to evade the rules in ways that all make perfect sense, but it’s going to be very hard for them to catch up.

Solomon: How about some of the geopolitical swing states? I mean, Abu Dhabi has released a 40-billion-parameter Falcon model. What kind of a role does that part of the world play in all this?

Schmidt: I recently spent some time talking with the people who did this, and they said, “We want to be an open-source player. We want to solve a lot of the Arab, Arabic content issues.” Makes perfect sense. “And we want to use this to build businesses in our country and neighboring countries that make sense.” Very reasonable.

The product is called Falcon. It’s a good example of this technology not being unique to the US. The obvious question is: How did they do such a good job? And the answer is that they had plenty of money, they had plenty of good hardware, and they must have brought in some talent from outside their country.

Solomon: Super interesting. This has been a boon for manipulated media with deepfakes. How are we going to deal with this? How is society going to adapt to this?

Schmidt: I proposed that laws be changed to require social media companies to know where the content came from by watermarking who submitted it or recording them using digital technology, publishing their rules, and holding them to their rules.

In other words, if a company says, “We allow anything,” then that’s okay. If they say, “We don’t allow X,” then they have to actually implement “Don’t allow X.” All very sensible, in my view. I am pretty skeptical that anything will change in the next few years until there’s a terrible crisis because there are too many power centers to make it happen.

I hope that the well-run companies will be under enormous pressure to not have bad speech or misinformation, and that they actually police that. The trends are against my point of view. If you look at Twitter, the Trust and Safety Group has been eliminated.

At Meta, they cut their Trust and Safety Group down by a factor of two for reasons I don’t fully understand. The Google people I’ve talked with have a pretty significant AI Trust and Safety Program, which I reviewed.

Hopefully, a few of the good companies—I assume Microsoft is doing the same thing—will then put at least reputational pressure on the others to catch up.

There are plenty of people who either hate elites, hate the government, hate democracy, or are funded by the Russians. There are plenty of people who have nothing to do but spread misinformation.

They wake up in the morning. They write computer code, and they say, “Out you go. Out you go. Out you go.” And they manipulate it. So it is a contest between the people who want to manipulate an outcome and the proper host. And remember, it’s not just evil national security people.

There are also businesses that will fund counterintelligence campaigns. There’s evidence on both sides in America that politicians are going to start using these things in various forms of misinformation.

Solomon: Let’s look on the glass-half-full side. What’s one thing you’re excited about that you want to leave us with?

Schmidt: Let’s talk about AlphaSense. What AlphaSense did is take very special data out of Goldman and make it available to all of their customers in a very powerful way. It made them smarter. It’s also something that Google couldn’t have done because the information wasn’t generally available. I like these systems where the computer is working with the person to make them smarter.

I defy you to argue against making all the humans in the world smarter. We’re just going to be better with a more liberal and tolerant society where people have ideas and people can frame things.

These tools, in aggregate, accelerate the rise of prosperity, not just in America, but worldwide.

Solomon: That’s been the story of technology.

Schmidt: The quicker we can get everybody up to our level of sophistication and approach, the safer the world will be.

The above conversation concludes the third part of our three-part blog series. You can read part one on the future of genAI in business and part two on what’s next for genAI.

If you’d like to read the entire interview now, you can download the full report on the future of generative AI in business. If you’d rather listen to the full interview while you work or on your morning commute, access the on-demand webinar here.