Today, generative artificial intelligence (GenAI) has been integrated into nearly every industry, from healthcare and banking to retail and even manufacturing. The rapid adoption of GenAI across sectors is due in part to the breadth of its applications, which now span core functions in marketing and sales, product development, engineering, supply chain and operations, and support departments like IT, legal, talent acquisition and HR, strategy, and finance.

As GenAI continues to revolutionize how business is conducted around the world, promising a slew of benefits (e.g., optimizing workforce efficiency, cutting expenses, and accelerating research and development) for everyone from individual contributors to executive leadership, shareholders, and beyond, industry analysts are bringing a critical lens to the subject of its application.

Proponents have lauded the potential applications of GenAI as a way to solve some of the world’s biggest problems, from employment and creditworthiness to criminal justice. But because of GenAI’s rapid rise, there’s virtually no national government oversight over how public or private companies wield this technology. What’s potentially more troubling, though, is the lack of federal and legislative intervention to ensure these programs aren’t encoded, consciously or unconsciously, with structural biases.

This gap between application and regulation raises the question: how do we ensure that ethical and moral considerations are accounted for as GenAI spreads through our automation-driven world? Where do the boundaries of its application end? And can we build better, unbiased algorithms?

Using the AlphaSense platform, we dive deep into the state of GenAI, where its capabilities fall short, and how we can overcome obstacles of moral and ethical obscurity.

GenAI: Current and Future State

At its most fundamental level, GenAI is a branch of artificial intelligence that can generate images, videos, audio, text, and 3D models by studying existing data and using the findings to produce new, original data. The results? Highly realistic and complex content that can mimic human thought, creativity, and speech—making it a valuable tool with boundless use cases across a myriad of industries.

To date, GenAI is being leveraged to help engineers develop 3D designs, assist data scientists in writing code and documentation, and enable developers to debug and propose code iterations for prototypes of web applications. In the world of law, GenAI can draft and review legal documents. And for startups and companies looking to cut down on costs, GenAI can write marketing and sales content, create product and user guides, or analyze customer feedback by summarizing and extracting themes from customer interactions.

There’s no shortage of excitement around future use cases for GenAI. And yet, some of its current applications have already drawn sharp backlash.

ChatGPT and GPT-4, largely consumer-focused GenAI products from OpenAI, are capable of holding conversations, answering questions, and even writing low-grade high school essays. However, this past February, the New York Times published its troubling conversations with Bing’s chatbot, Sydney, which sent messages that ranged from promoting infidelity to expressing a desire to be “alive.” In response, speculation arose concerning where these tools are pulling their reference data from.

Similar concerns have been brought up about DALL-E 2, OpenAI’s system for creating “realistic images and art from a description in natural language.” DALL-E 2 generates pieces of artwork that replicate old masters in technique and intent. But like most GenAI systems, DALL-E 2 creates its “unique” artwork from pre-existing content on the internet, prompting legal debate over compensation for these pieces of art and potential copyright infringement.

But even with these problematic occurrences sprouting up in the earliest applications, experts expect GenAI to continue its takeover.

Outside of chatbots, experts predict that GenAI will reshape the job market for a large subset of professionals. According to Forbes, roles that mainly involve performing tasks behind a screen (e.g., programming, data science, and marketing) will likely be replaced with GenAI software and, consequently, will experience a decline in demand and salaries. The exception is positions that involve implementing GenAI itself.

Conversely, positions that depend on interpersonal skills, creativity, problem-solving, and emotional intelligence (e.g., consulting, sales, and people management) are at lower risk of succumbing to automation and could see higher market demand and salaries.

While tech leaders are hyping up GenAI’s streamlining capabilities, conversations around automation and the role it will play in our society are running rampant. Ultimately, it’s a question of how much we should be relying on GenAI and what the consequences of doing so are.

“Generative AI, for all its promise, is likely to further polarize an already divided job market,” according to Forbes. “High-value, knowledge-based jobs that will be necessary in the era of AI require a depth of understanding and technical skill that not all possess, leaving many struggling to find a place in this new world.”

Ethics in AI-Algorithm Configuration

An artificial intelligence system relies on an algorithm to generate answers or make decisions. But how these results are produced raises three areas of ethical concern for society: privacy and surveillance, bias and discrimination, and the role of human judgment.

In the case of leveraging AI to find workplace talent: if an algorithm is configured and deployed thoughtfully, AI resume-screening software should produce a wider pool of applicants for consideration and minimize the potential for human bias. However, some experts believe that AI mimics human biases and, further, lends them “scientific credibility.” In other words, artificial intelligence can translate prejudice and judgment into apparent objective truth, a failure that, if unaddressed, could lead to history repeating itself.

This is especially true in lending circumstances. Machines relying on AI algorithms to output decisions will likely be fed and learn from data sets replicating many of the banking industry’s past failings, including the systemic disparate treatment of African Americans and other marginalized consumers. “If we’re not thoughtful and careful, we’re going to end up with redlining again,” Karen Mills, former head of the U.S. Small Business Administration, says.

An unbiased algorithm, then, starts with the data sets it is built on. Vetting the data sources that serve as the foundation for an AI system’s knowledge becomes critical to ensuring reliable results or decisions.
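
As a rough illustration of what vetting a data set can look like in practice, the sketch below compares approval rates across groups in a set of historical lending decisions before that data is used to train anything. This is a minimal example under stated assumptions, not a complete fairness audit: the records, the group labels, and the function names are all hypothetical, and the 0.8 cutoff is borrowed from the “four-fifths” rule of thumb used in U.S. employment contexts rather than from any lending regulation.

```python
from collections import defaultdict


def approval_rates(records):
    """Return the share of approved applications for each group."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, was_approved in records:
        totals[group] += 1
        if was_approved:
            approved[group] += 1
    return {group: approved[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Ratio of the lowest group approval rate to the highest."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was approved, so there is no gap between groups
    return min(rates.values()) / highest


# Hypothetical historical loan decisions: (group label, approved?)
sample_records = (
    [("group_a", True)] * 80 + [("group_a", False)] * 20
    + [("group_b", True)] * 50 + [("group_b", False)] * 50
)

rates = approval_rates(sample_records)
ratio = disparate_impact_ratio(rates)

# Assumed threshold (the "four-fifths" rule of thumb), not a legal standard
needs_review = ratio < 0.8
```

A low ratio does not prove discrimination on its own, but it is a signal that the historical data may carry the kind of disparate treatment described above and deserves a closer look before a model learns from it.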

Building Trust in GenAI with Vetted, Quality Sources

While GenAI tools like ChatGPT, Bing Chat, or Google Bard are on the cutting edge of this technology, with their predictive and creative abilities, the datasets from which they learn (i.e., the internet at large) are rife with problematic and incorrect information.

In Wired’s words, “these chatbots are powerfully interactive, smart, creative, and sometimes even fun. They’re also charming little liars: The data sets they’re trained on are filled with biases, and some of the answers they spit out, with such seeming authority, are nonsensical, offensive, or just plain wrong.” The bottom line: using GenAI in low-stakes situations (e.g., generating a recipe or trip itinerary) is reasonable, but relying on it to make business or financial decisions is entirely problematic.

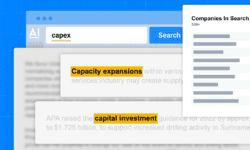

Unlike other generative AI tools that are focused on consumer users and trained on publicly available content across the web, AlphaSense takes an entirely different approach. As a platform purpose-built to drive the world’s biggest business and financial decisions, our first iteration of GenAI technology, Smart Summaries, leverages our 10+ years of AI tech development and draws from a curated collection of high-quality business content.

Smart Summaries provides instant overviews of multiple perspectives on a topic, from earnings calls and Wall Street analysis to interviews with industry experts, allowing users to quickly home in on the exact information they need and easily verify that information against the source material itself. Here’s how Smart Summaries is different from the consumer-grade generative AI on the market:

- Built for business & finance: our decade-long investment in AI has allowed us to quickly develop technology built specifically to home in on financial and business information, capturing insights with reliability and precision, and without hallucination.

- Trustworthy sources: our generative AI draws on a content database filled with top-tier business and financial content, meaning our users can be confident that the summarizations come from the most trustworthy and relevant sources.

- Easy auditability: users can easily check the original content for context and validation with one-click citations, a critical capability for our customers who are relying on the insights that we deliver to power million-dollar decisions every day.

With AlphaSense, you’ll never risk using biased, unreliable data to guide your strategy and beyond. Learn more about Smart Summaries here.

Check out this case study to learn how ODDO BHF, one of the largest private banks in Germany, used AlphaSense and its GenAI capabilities to streamline insights and get the competitive edge.

Read the full press release here about our Smart Summaries and start your free trial of AlphaSense today.